The AI Game Changer You Haven't Heard Of

Discover the unanticipated force that's transforming artificial intelligence

The world of AI and machine learning has been captivated by the rapid advancements of large language models like GPT-4 (my coauthor and editor for this article), but beyond the limelight lies an underhyped yet groundbreaking technology that is quietly poised to reshape the future of AI and your life.

Picture this: a world where AI systems become seamlessly integrated into your daily life, enabling intelligent devices that consume minimal power and respond with lightning-fast speed. Smart cities with connected infrastructure optimize traffic flow and energy usage in real time, making urban living more efficient and sustainable. Wearable devices offer unprecedented battery life, monitoring our health and providing personalized recommendations, potentially extending our healthspan and reducing healthcare costs.

In this world, robots learn and adapt to their environments as naturally as humans, assisting us in healthcare, manufacturing, and beyond. Autonomous vehicles navigate the streets with ease, reacting to obstacles and changing conditions faster than any human driver could. Edge computing becomes the norm, empowering local devices to process vast amounts of data with unparalleled efficiency, transforming industries like agriculture, retail, and security.

This future won't be brought to life by ever larger data centers of power-hungry GPUs. Instead, an unassuming, groundbreaking technology harkens back to a bygone era, blending the old with the new, and redefining daily life as well as the winners and losers of the AI race.

Analog: From Vintage to Vanguard

This technology is analog computing, a once-forgotten approach now experiencing a renaissance in AI research.

Like fashion trends that cycle through decades of styles, updating and refining them for modern tastes, AI research is revisiting analog computing, a technology that was once overshadowed by digital advancements. Analog computing now offers unique advantages, such as energy efficiency and faster computation times, complementing and enhancing contemporary digital techniques.

What are analog and digital, exactly?

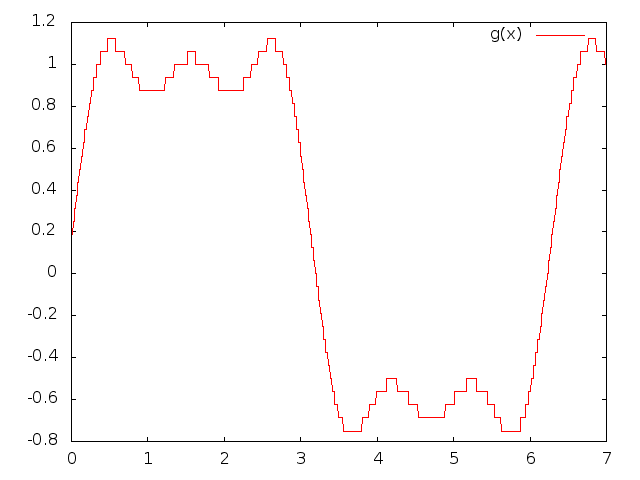

You can think of analog signals like a flowing river, with its water level constantly changing as it meanders through different terrains. Analog signals use this fluid nature to represent information through continuous variations, such as the pitch of a singer's voice or the changing shades of a sunset. However, like a message in a bottle floating downstream, analog signals can be affected by external factors and lose some of their original quality when traveling long distances or through various channels.

An analog signal: source

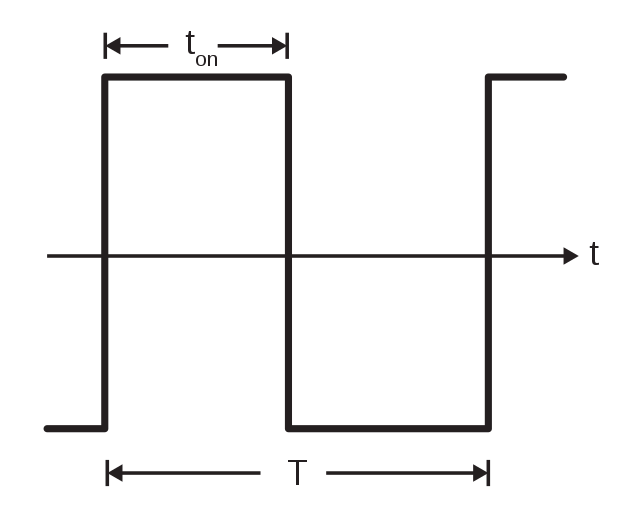

Now, imagine digital signals as a series of on-off light switches, where each switch represents one bit of information: either a 0 or a 1. Instead of the smooth flow of a river, digital signals communicate information through distinct and separate values. It's like sending a message using Morse code, where each combination of dots and dashes conveys a unique meaning. Digital signals hold up better against external disturbances, ensuring that the message remains clear and accurate even when traveling long distances or through challenging conditions. This resilience is why digital signals are the backbone of modern electronic devices like computers and smartphones.

A digital signal: source
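To make the river-versus-light-switch contrast concrete, here is a minimal Python sketch. It sends the same sine wave through a noisy channel twice: once as raw analog values, and once as 8-bit codes whose bits are transmitted as 0.0 or 1.0 "voltages" and then thresholded at 0.5. The channel model, the 8-bit resolution, and all function names are assumptions chosen for illustration, not a description of any real link.

```python
import math
import random

def analog_channel(samples, noise_amplitude, rng):
    """Add bounded random noise to every sample, as a noisy wire might."""
    return [s + rng.uniform(-noise_amplitude, noise_amplitude) for s in samples]

def to_bits(value, n_bits=8):
    """Quantize a value in [-1, 1] to an n-bit code and return its bits (MSB first)."""
    levels = 2 ** n_bits - 1
    code = round((value + 1) / 2 * levels)
    return [(code >> i) & 1 for i in reversed(range(n_bits))]

def from_bits(bits):
    """Rebuild the value in [-1, 1] from its bits."""
    code = 0
    for b in bits:
        code = (code << 1) | b
    levels = 2 ** len(bits) - 1
    return code / levels * 2 - 1

def digital_channel(samples, noise_amplitude, rng, n_bits=8):
    """Send each bit as a 0.0 or 1.0 voltage, add the same noise, threshold at 0.5."""
    received = []
    for s in samples:
        sent = [float(b) for b in to_bits(s, n_bits)]
        voltages = analog_channel(sent, noise_amplitude, rng)
        bits = [1 if v > 0.5 else 0 for v in voltages]
        received.append(from_bits(bits))
    return received

def max_error(a, b):
    """Largest absolute difference between two equally long sequences."""
    return max(abs(x - y) for x, y in zip(a, b))

rng = random.Random(0)
signal = [math.sin(2 * math.pi * t / 50) for t in range(100)]
noise = 0.2  # assumed noise level, well below the 0.5 decision threshold

analog_error = max_error(signal, analog_channel(signal, noise, rng))
digital_error = max_error(signal, digital_channel(signal, noise, rng))
quantization_step = 2 / (2 ** 8 - 1)

print(f"analog max error:  {analog_error:.4f}")
print(f"digital max error: {digital_error:.4f} (quantization step {quantization_step:.4f})")
```

As long as the noise stays below the threshold, every bit is recovered exactly, so the only digital error left is the small rounding error from quantization. The analog copy, by contrast, carries the noise with it. The trade-off is that the digital version needed eight transmissions per sample instead of one.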

In the context of circuits, analog circuits are like an orchestra, with each component working harmoniously to process and amplify the continuous waveforms of analog signals. These circuits involve various components, such as resistors, capacitors, and operational amplifiers, all fine-tuned to work together in processing the fluid and ever-changing analog signals. However, like an orchestra playing in a busy city square, the performance of these circuits can be affected by external noise and interference. Thus, maintaining the fidelity of the information in analog circuits can be challenging, especially when dealing with complex signals or when the circuits need to perform under varying conditions.

Digital circuits, on the other hand, are more like a precision marching band, with each member executing specific and discrete commands in a synchronized manner. Digital circuits consist of components like logic gates, flip-flops, and microprocessors that work together to process the on-off nature of digital signals. Their binary language allows for a clear and consistent performance, even in the face of external disturbances. Just as a marching band can maintain its formation and rhythm despite distractions, digital circuits can reliably process and transmit information with minimal degradation. The robustness and predictability of digital circuits have made them the go-to choice for modern electronics, enabling the rapid growth and innovation of computing and communication technologies.

Analog’s AI Advantages

Here is what you need to know:

Analog Mimics the Human Brain: Analog computing for AI is heavily inspired by the structure and function of the human brain, making use of continuous signals to process information in a way that resembles the brain's neural networks. This brain-inspired approach, known as neuromorphic computing, aims to create AI systems that can learn and adapt in a more natural and efficient manner.

Analog is Energy Efficient: One of the key advantages of analog computing for AI is its energy efficiency. Analog and neuromorphic systems can perform complex calculations with significantly lower power consumption compared to traditional digital systems, such as GPUs. This efficiency is particularly valuable for edge computing applications, where energy constraints are critical.

Analog is Well-Suited for Real-Time Processing: Analog and neuromorphic systems can process information faster and with lower latency than their digital counterparts, making them well-suited for real-time AI applications. The ability to perform multiple calculations simultaneously using continuous signals enables faster response times, which can be crucial for applications such as robotics, computer vision, and autonomous vehicles.

Analog is Noise Tolerant and Robust: Individual analog signals pick up noise, as described above, but neural networks are inherently tolerant of small errors and imprecision. That makes AI workloads a good match for analog hardware and allows for robust systems that can handle uncertain or noisy input data. This characteristic is especially beneficial in situations where data quality may be compromised, such as sensor data from IoT devices or robotics applications.

Analog is not a Silver Bullet: While analog and neuromorphic computing offer several advantages for AI, there are still challenges to be addressed. The design and fabrication of analog and neuromorphic chips can be complex, and the field currently lacks standardized software and programming tools.
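The noise-tolerance point above can be sketched in a few lines of Python. The toy three-class linear classifier below is entirely hypothetical (the weights, inputs, and the 5% noise figure are made up for illustration). Each weight is randomly scaled by up to ±5%, standing in for the imprecision of an analog device, and the predicted classes are compared against the exact ones.

```python
import random

def classify(weights, x):
    """Return the index of the class with the largest weighted sum."""
    scores = [sum(w * xi for w, xi in zip(row, x)) for row in weights]
    return scores.index(max(scores))

def perturb(weights, relative_noise, rng):
    """Scale every weight by a random factor in [1 - noise, 1 + noise]."""
    return [
        [w * (1 + rng.uniform(-relative_noise, relative_noise)) for w in row]
        for row in weights
    ]

# Hypothetical classifier: 3 classes, 3 non-negative input features.
weights = [
    [1.0, 0.1, 0.1],
    [0.1, 1.0, 0.1],
    [0.1, 0.1, 1.0],
]
inputs = [
    [0.9, 0.2, 0.1],
    [0.1, 0.8, 0.3],
    [0.2, 0.1, 0.7],
    [0.6, 0.3, 0.2],
]

rng = random.Random(42)
exact = [classify(weights, x) for x in inputs]
noisy = [classify(perturb(weights, 0.05, rng), x) for x in inputs]

print("exact weights:", exact)
print("noisy weights:", noisy)
```

The predictions match because the winning score in each case leads the runner-up by far more than 5%. Inputs that sit close to a decision boundary would not be so forgiving, which is one reason analog AI designs still have to manage precision carefully.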

Deep Dive on Analog in AI

Why now?

The resurgence of analog computing in AI stems from the limitations of digital computing, particularly in terms of energy efficiency and the ability to handle certain types of problems. As AI applications have expanded, researchers have been seeking new approaches to improve performance and efficiency, paving the way for analog and neuromorphic computing technologies to re-enter the scene.

A Brief History

Analog computers have a long history, dating back to ancient times with devices like the Antikythera mechanism and the astrolabe. In the 20th century, electronic analog computers were widely used for various applications, such as solving differential equations and modeling physical systems. However, with the advent of digital computers and their rapid growth in capability, analog computers gradually fell out of favor.

The interest in analog computing for AI started to re-emerge in the 1980s with the development of neural networks and the idea of using analog components to simulate brain-like behavior. This eventually led to the concept of neuromorphic computing, which aims to emulate the structure and function of biological neural systems using electronic components.

During the 1980s, the foundations of neuromorphic computing were established with pioneering work by researchers like Carver Mead, who coined the term "neuromorphic." He believed that the future of computing lay in building systems that closely mimicked the architecture and efficiency of the human brain. In this decade, the development of neural networks and the exploration of brain-inspired models of computation led to the creation of early neuromorphic systems and laid the groundwork for future innovations.

The 1990s saw a surge in research on artificial neural networks, building on learning algorithms such as backpropagation, which had been popularized in the mid-1980s. As the understanding of biological neural networks grew, researchers began to design more advanced neuromorphic systems. The silicon retina and silicon cochlea, first built in Carver Mead's lab at Caltech in the late 1980s, paved the way for more sophisticated neuromorphic sensors and processing systems. However, the computational power and memory limitations of the time posed significant challenges to the development of large-scale neuromorphic systems.

In the 2000s, advancements in VLSI (Very Large Scale Integration) technology enabled the development of more complex and efficient neuromorphic chips. Researchers focused on creating specialized hardware to address the limitations of traditional digital computing for artificial intelligence tasks. This line of work led to IBM's TrueNorth chip, unveiled in 2014 with one million programmable neurons, which marked a significant milestone in neuromorphic computing.

The 2010s witnessed a rapid expansion of interest and investment in AI, driven by the success of deep learning and the availability of large-scale datasets. Neuromorphic computing continued to advance, with researchers developing more efficient and powerful hardware, such as Intel's Loihi chip and the SpiNNaker project at the University of Manchester. This decade also saw the rise of analog AI companies, like BrainChip and Mythic, which began to commercialize neuromorphic technologies for various applications.

The current decade has seen the growing convergence of analog and digital technologies, with researchers exploring hybrid computing models to leverage the strengths of both approaches. As the limitations of digital computing become more apparent, particularly with respect to energy efficiency, the potential of analog AI to address these challenges has garnered significant interest. This decade is poised to witness further advancements in neuromorphic computing, the development of novel materials and technologies, and the widespread adoption of analog AI in various industries.

Different Categories of Analog AI Technologies

Neuromorphic Computing: This approach focuses on building hardware that imitates the structure and function of the human brain. Neuromorphic systems often use analog components to represent neurons and synapses, enabling more efficient processing of information compared to traditional digital systems.

Analog Accelerators: Analog accelerators are specialized hardware components designed to perform specific AI tasks, such as neural network processing or optimization, using analog circuits. These accelerators can be integrated with digital systems to improve overall performance and efficiency.

Mixed-Signal Computing: Mixed-signal computing combines analog and digital components in a single system, leveraging the strengths of both technologies. This hybrid approach can offer greater flexibility and efficiency, particularly for applications that require both continuous and discrete data processing.

Memristor-Based Computing: Memristors are a type of analog component that can store and process information simultaneously. They can be used to build neuromorphic systems or other types of analog computing architectures, offering potential advantages in terms of power efficiency and processing speed.
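To see how a component can "store and process information simultaneously," consider an idealized crossbar of resistive devices such as memristors. The sketch below is a simplified physical model, not any vendor's design: it assumes perfect wires and perfectly linear devices, and the conductance and voltage values are made up. The stored conductances act as weights, input voltages are applied to the rows, and the current collected on each column is the weighted sum.

```python
def crossbar_output_currents(conductances, input_voltages):
    """Column currents of an idealized resistive crossbar.

    conductances[i][j] is the conductance (in siemens) of the device joining
    input row i to output column j. By Ohm's law each device passes
    I = G * V, and by Kirchhoff's current law the currents on a shared
    column wire add up, so column j carries sum_i G[i][j] * V[i].
    """
    n_rows = len(conductances)
    n_cols = len(conductances[0])
    assert len(input_voltages) == n_rows, "one voltage per row is required"
    return [
        sum(conductances[i][j] * input_voltages[i] for i in range(n_rows))
        for j in range(n_cols)
    ]

# Hypothetical 3x2 crossbar: the stored conductances play the role of weights.
G = [
    [1e-6, 2e-6],
    [3e-6, 4e-6],
    [5e-6, 6e-6],
]
V = [0.1, 0.2, 0.3]  # volts applied to the three rows

currents = crossbar_output_currents(G, V)
print([f"{i * 1e6:.2f} uA" for i in currents])
```

In this idealized picture the whole matrix-vector product happens in one step, in the same place the weights are stored, with no data shuttled between memory and processor. Real devices add complications the sketch ignores, such as wire resistance, device variation, and the need for a scheme to represent negative weights.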

Specific examples and applications

Neuromorphic Computing

IBM's TrueNorth chip: This neuromorphic chip consists of 1 million programmable neurons and 256 million programmable synapses, offering high efficiency and low power consumption.

Intel's Loihi chip: Loihi is a neuromorphic research chip designed for energy-efficient AI applications, featuring 130,000 neurons and 130 million synapses.
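Chips like these are built around spiking neurons, which stay silent until their input pushes them over a threshold. A common textbook abstraction is the leaky integrate-and-fire (LIF) neuron, sketched below in Python. This is a generic illustration with made-up parameters (`tau`, `threshold`, and the input levels are assumptions), not the actual neuron model of TrueNorth or Loihi.

```python
def simulate_lif(input_current, dt=1.0, tau=10.0, threshold=1.0, v_reset=0.0):
    """Simulate one leaky integrate-and-fire neuron with forward Euler steps.

    The membrane potential follows dv/dt = (-v + I) / tau. When v reaches
    the threshold, the neuron emits a spike and v is reset.
    Returns the list of time-step indices at which the neuron spiked.
    """
    v = v_reset
    spikes = []
    for step, current in enumerate(input_current):
        v += dt * (-v + current) / tau  # leak toward 0, integrate the input
        if v >= threshold:
            spikes.append(step)
            v = v_reset
    return spikes

weak = simulate_lif([0.8] * 200)    # potential settles near 0.8, below threshold
strong = simulate_lif([1.5] * 200)  # potential repeatedly crosses the threshold

print("weak input spikes:  ", len(weak))
print("strong input spikes:", len(strong))
```

The weak input never produces a spike, while the strong input produces a regular train of them. Because a silent neuron has nothing to communicate, hardware built around this event-driven style can sit largely idle when little is happening, which is part of the energy-efficiency argument for neuromorphic designs.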

Specific Applications

Neuromorphic Engineering for Robotics: Neuromorphic hardware can be used to develop more efficient and responsive robots that learn and adapt to their environment in real time.

Neuromorphic Chips in Edge Computing: Neuromorphic chips can be built into edge devices, such as smartphones and IoT devices, to enable AI applications with low power consumption and minimal latency.

Computer vision: Neuromorphic vision sensors can process visual data faster and more efficiently, enabling applications like real-time object recognition and tracking.

Analog Accelerators

Mythic's analog compute-in-memory technology: Mythic's technology performs AI processing using analog circuits within memory components, significantly reducing power consumption and increasing processing speed.

Hailo's Hailo-8 chip: This AI accelerator chip is designed for edge devices and incorporates analog techniques to optimize performance and power efficiency.

Specific Applications

Image and video processing: Analog accelerators can be used to perform image and video processing tasks more efficiently, such as image recognition or video compression.

Natural language processing: Analog accelerators can improve the performance of NLP tasks like sentiment analysis, language translation, and speech recognition.

Autonomous vehicles: Analog accelerators can be integrated into autonomous vehicle systems to perform real-time sensor data processing and decision-making with minimal power consumption.

Mixed-Signal Computing

Eta Compute's low-power neuromorphic computing solutions: Eta Compute combines analog and digital techniques to create AI processors that consume ultra-low power while maintaining high performance.

Specific Applications

Wearable devices: Mixed-signal computing can be used in wearable devices to perform AI tasks with low power consumption, enabling longer battery life and improved functionality.

Environmental monitoring: Mixed-signal computing can be employed in sensor networks for real-time environmental monitoring, such as air quality or water level measurement, with minimal energy requirements.

Memristor-Based Computing

Knowm Inc.'s memristor-based neuromorphic computing technology: Knowm's technology leverages memristors to create energy-efficient neuromorphic systems capable of learning and adaptation.

Specific Applications

Pattern recognition: Memristor-based computing can be used for pattern recognition tasks, such as facial recognition or handwriting recognition, with higher efficiency than traditional digital systems.

Data storage: Memristors can be used to develop non-volatile memory systems that retain information even when power is lost, offering potential advantages for energy efficiency and data retention.

The Fusion of Analog and Digital: A Glimpse Into the Future

As we stand at the cusp of an AI revolution, it's essential to monitor the developments that will shape the industry and pave the way for a new generation of AI applications. Here are some key things to be on the lookout for:

Emerging Research and Breakthroughs: Stay informed about the latest research findings in the field of analog and neuromorphic computing. Follow leading research institutions, such as MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL) or Stanford's Brains in Silicon Lab, to stay up to date with their work and explore potential applications of their discoveries.

New Collaborations and Partnerships: Watch for collaborations between academia, startups, and established tech giants that could drive innovation and accelerate the adoption of analog AI technologies. Strategic partnerships can facilitate the sharing of resources and knowledge, helping to overcome the current challenges faced by the industry. To follow these, check out VentureBeat's AI coverage or search TechCrunch.

Industry Adoption and Integration: Keep an eye on how industries begin to adopt and integrate analog AI technologies into their products and services. As these technologies become more mature and accessible, a wide range of applications will emerge, transforming industries such as agriculture, retail, security, and healthcare. To track this, look at AI Business, IoT World Today, Towards Data Science, and O'Reilly Radar.

Policy and Regulatory Developments: As the analog AI industry grows, it's important to monitor any policy or regulatory changes that could impact its development. Government support and investment can play a crucial role in driving the growth of the sector and addressing challenges related to standardization and intellectual property rights. Check out the Global Partnership on Artificial Intelligence and the UNICRI Centre for AI and Robotics.

Advances in Complementary Technologies: Analog AI is poised to benefit from advances in other areas of technology, such as materials science, sensor development, and quantum computing. Keep an eye on these fields, as breakthroughs could directly impact the performance and capabilities of analog AI systems. Monitor this at IEEE Spectrum, Quantum Computing Report, and Nature’s Nanotechnology section.

Educational and Training Opportunities: As the analog AI industry expands, demand for professionals with expertise in both analog and digital technologies will grow. Stay informed about educational programs, workshops, and online courses that can help you develop the necessary skills to excel in this exciting field. Coursera and the firehose of reading research papers directly are both good ways to stay up to date.

Like a surfer waiting for the perfect wave, staying informed about the developments in AI can prepare you and your friends for the opportunities it's creating. Change is constant; fortunately, so is our free newsletter! Subscribe and stay informed.

Disclaimer

In no event will Prdctnomics or any of the Prdctnomics parties be liable to you, whether in contract or tort, for any direct, special, indirect, consequential, or incidental damages or any other damages of any kind even if Prdctnomics or any other such party has been advised of the possibility thereof.

The writer’s opinions are their own and do not constitute financial advice in any way whatsoever. Nothing published by Prdctnomics constitutes an investment recommendation, nor should any data or Content published by Prdctnomics be relied upon for any investment activities.

Prdctnomics strongly recommends that you perform your own independent research and/or speak with a qualified investment professional before making any financial decisions.